Introduction

In 2023, after this site had been live for a while and had collected a good amount of data, I trained a LORA model on the then top 100 images on Erorate.com. I wanted to see whether using this LORA model would improve the Elo scores of freshly generated images. This post goes over the results of that study. At the time of writing, the highest-scoring image on the site is actually one of the images generated with an Erorate LORA model.

Understanding LORAs

LORA stands for “Low-Rank Adaptation,” a method designed to fine-tune large pre-trained models efficiently. Before LORAs, people fine-tuned the entire model to add new concepts to it, which was computationally expensive and time-consuming. LORAs changed the game: the original weights stay frozen, and only a pair of small low-rank matrices per adapted layer is trained, with their product added on top of the frozen weights. Because so few parameters are trained, the process is much faster and the resulting file is small. You can then apply a LORA “on top” of a pre-trained model to adapt it to new data or tasks.
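To get a feel for how much smaller a LORA is, here is a rough sketch that counts trainable parameters for a single layer. A LORA represents the update to a d × k weight matrix as the product of two thin matrices, B (d × r) and A (r × k), where the rank r is small. The layer size (768 × 768) and rank (8) below are illustrative assumptions, not the settings used for the Erorate LORA.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when updating a full d x k weight matrix."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r LORA update B @ A,
    where B is d x r and A is r x k."""
    return d * r + r * k


# Hypothetical layer size and rank, chosen only for illustration.
d, k, r = 768, 768, 8
full = full_finetune_params(d, k)
lora = lora_params(d, k, r)
print(f"full: {full}, lora: {lora}, ratio: {full / lora:.0f}x")
```

For this one layer the LORA trains 12,288 parameters instead of 589,824, roughly 48 times fewer. The real savings depend on which layers are adapted and the rank chosen.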

Erorate.com Top 100 LORA

So, I trained the LORA for 2500 steps on the top 100 images on Erorate.com. I then used this new LORA to generate sets of images with varying LORA weights. A weight of zero means that the LORA has no effect, so the prompt produces the same image the base model would. As the weight increases, the LORA has more and more effect on the image. I picked weights of 0.0, 0.25, 0.50, 0.75, and 1.0 to generate the images. In total, I generated 400 images. Sure, it’s not a huge dataset, but I didn’t want to spam the site with thousands of very similar images.
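The weight behaves like a multiplier on the LORA’s update. A minimal sketch, assuming the common formulation where the effective weights are W + weight × (B @ A), with W the frozen base matrix and B, A the small trained LORA matrices. The 2 × 2 matrices are toy values, and many implementations also scale the update by an alpha/rank factor, which is omitted here for clarity.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product of a (n x m) and b (m x p)."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def apply_lora(w: Matrix, b: Matrix, a: Matrix, weight: float) -> Matrix:
    """Return W + weight * (B @ A), the effective weights with the LORA applied.

    weight = 0.0 leaves W unchanged (the base model); larger weights push
    further toward the LORA; negative weights push in the opposite direction.
    """
    delta = matmul(b, a)
    return [
        [w[i][j] + weight * delta[i][j] for j in range(len(w[0]))]
        for i in range(len(w))
    ]


# Toy 2x2 base weights and a rank-1 update (B is 2x1, A is 1x2); values are made up.
W = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0], [2.0]]
A = [[0.5, 0.5]]

for lora_weight in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(lora_weight, apply_lora(W, B, A, lora_weight))
```

Because the update is scaled linearly, the weights 0.25 through 1.0 interpolate between the base model and the full LORA effect.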

Key Findings

Significant Impact of LORA Weights

Fast forward to 2024: these images have taken part in over 250,000 duels, so their Elo scores should be fairly stable by now. I analyzed the data to see whether the LORA weight made any difference to the Elo scores.
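For readers unfamiliar with Elo, here is a sketch of the standard update rule applied after a single duel. It is an assumption that Erorate.com uses exactly this formula, and the K-factor of 32 is a common default rather than the site’s actual setting. Since each duel moves a rating by at most K points, scores settle down once an image has accumulated many duels.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(rating_a: float, rating_b: float, a_won: bool, k: float = 32.0):
    """Return the new (rating_a, rating_b) after one duel.

    k = 32 is a common default, assumed here; the K-factor Erorate.com
    actually uses is not stated in this post.
    """
    e_a = expected_score(rating_a, rating_b)
    s_a = 1.0 if a_won else 0.0
    new_a = rating_a + k * (s_a - e_a)
    new_b = rating_b + k * ((1.0 - s_a) - (1.0 - e_a))
    return new_a, new_b


print(update_elo(1500, 1500, a_won=True))  # (1516.0, 1484.0)
```

An upset (a low-rated image beating a high-rated one) moves both ratings more than an expected result does, and the total rating points of the pair are conserved.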

LORA Weight Comparison

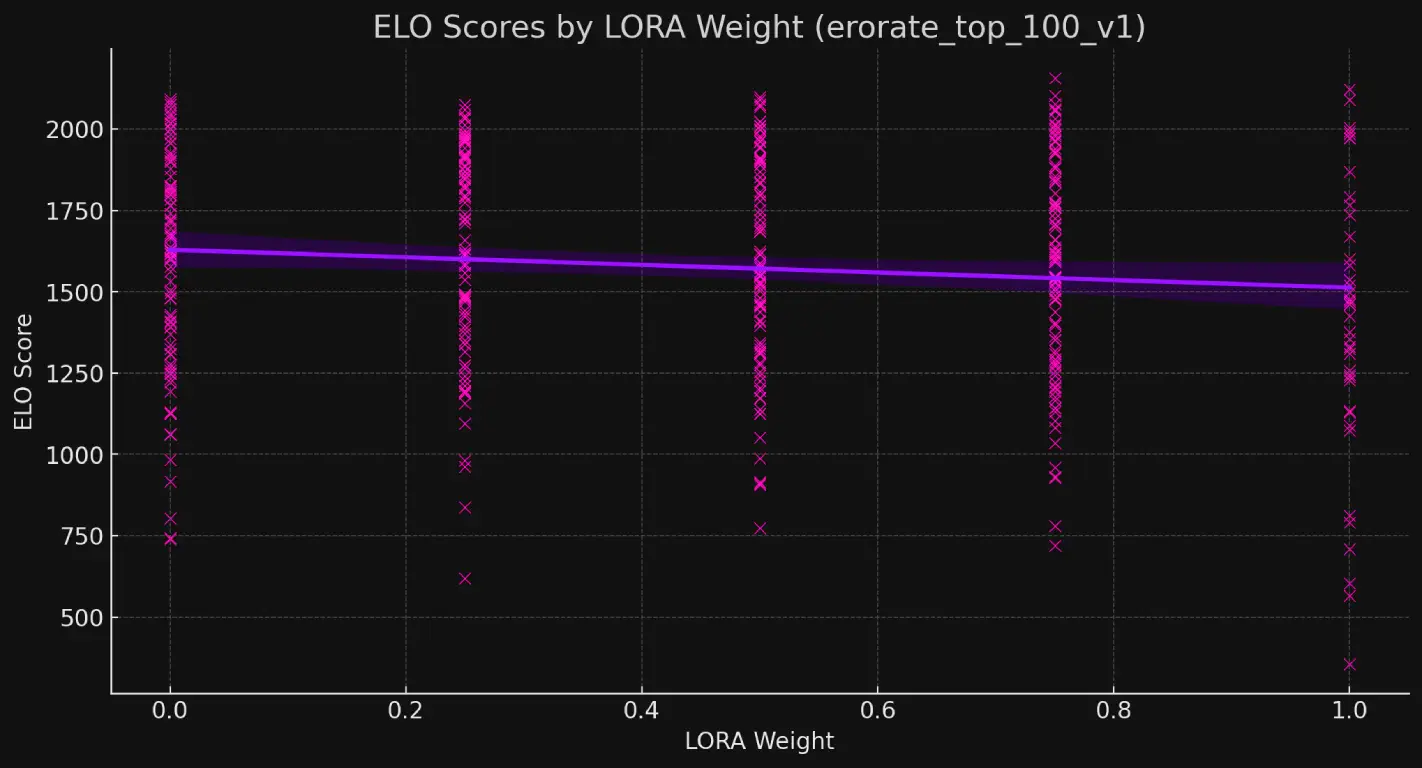

The graph above shows the distribution of Elo scores for images generated with each LORA weight. The summary statistics are:

| LORA weight | Mean Elo score | Standard deviation |
|---|---|---|
| 0.00 (no LORA effect) | 1586.27 | 337.54 |
| 0.25 | 1630.30 | 328.24 |
| 0.50 | 1592.96 | 331.66 |
| 0.75 | 1575.36 | 334.90 |
| 1.00 | 1412.98 | 436.40 |
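To put the differences in perspective, the sketch below compares each weight against the zero-weight baseline using only the reported means and standard deviations. Cohen’s d is my own swapped-in illustration, not part of the original analysis, and the simple pooled SD assumes roughly equal group sizes, since the post does not report how many images each weight received. Without per-group counts, no significance test is attempted.

```python
import math

# (mean Elo, standard deviation) per LORA weight, copied from the results above.
RESULTS = {
    0.00: (1586.27, 337.54),
    0.25: (1630.30, 328.24),
    0.50: (1592.96, 331.66),
    0.75: (1575.36, 334.90),
    1.00: (1412.98, 436.40),
}


def compare_to_baseline(results, baseline=0.0):
    """For each weight, return (mean difference, Cohen's d) versus the baseline.

    Cohen's d here uses the simple pooled SD sqrt((s1^2 + s2^2) / 2),
    which assumes roughly equal group sizes.
    """
    base_mean, base_sd = results[baseline]
    out = {}
    for weight, (mean, sd) in results.items():
        if weight == baseline:
            continue
        diff = mean - base_mean
        pooled_sd = math.sqrt((sd ** 2 + base_sd ** 2) / 2)
        out[weight] = (round(diff, 2), round(diff / pooled_sd, 3))
    return out


for weight, (diff, d) in compare_to_baseline(RESULTS).items():
    print(f"weight {weight:.2f}: {diff:+.2f} Elo, d = {d:+.3f}")
```

The 0.25 gain of about 44 points is small next to a spread of over 300 points, while the drop at 1.00 is several times larger.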

Key Observations

- Higher Mean Scores: Images generated with LORA weights of 0.25 and 0.50 had higher mean Elo scores than those generated with a weight of 0.0 (no LORA effect), by about 44 and 7 points respectively. This suggests that using the LORA can improve the generated images, though the improvement is modest compared to a standard deviation of over 300 points.

- Tighter Distribution: The standard deviations for weights 0.25 and 0.50 (328.24 and 331.66) were slightly lower than at zero weight (337.54), meaning these images were marginally more consistent in quality.

- Optimal Weight Range: The best result came at 0.25, which had both the highest mean and the lowest standard deviation. At 0.50 the mean was close to that of zero weight, with marginally better consistency, and at 0.75 it fell slightly below the baseline. This suggests that a light to moderate amount of LORA effect gives the best results for this LORA.

- Degradation at High Weights: At a weight of 1.00 the mean Elo score dropped sharply, to 1412.98 (about 173 points below the baseline), and the standard deviation widened to 436.40. Where this drop-off begins depends heavily on the dataset and how the LORA was trained, but for this LORA a weight of 1.00 is clearly too high. Any LORA will eventually degrade the images if its weight is pushed too far.

Conclusion

This study suggests that applying a LORA can have a noticeable, though modest, impact on the quality of AI-generated images as measured by Elo scores. Using LORA weights around 0.25 to 0.50 can slightly improve the images’ rankings, while increasing the weight too much (like 1.00) can significantly degrade image quality.

This experiment highlights the importance of carefully selecting LORA weights to achieve the best results. It also underscores that while LORAs are a powerful tool for improving AI-generated content, they need to be used judiciously, because too strong a weight degrades the output.

In the future, I plan to explore other variables and techniques that could further enhance the quality of AI-generated images. Until then, happy generating!

Random observation

When assembling the dataset, I noticed two images that had been generated with a negative LORA weight. I didn’t include them in the dataset, but I did notice that their Elo scores were abysmal. A sample size of two is not enough to draw any conclusions, but perhaps there’s potential in training a LORA on the bad images and applying it with a negative weight to generate good images. It’s an interesting thought experiment at the very least.

Dataset

You don’t need to scrape the site to get the dataset if you want to conduct your own experiments. Just hit me up on Discord or Twitter, and I’ll generate a fresh version of the dataset for you.